Speed, reliability, flexibility and maybe more

Developing applications for the Internet today demands speed, reliability and flexibility. These qualities apply to every stage of a project, but here we are going to focus on deployment.

Configuring a server can be easy, but things get harder when you need a replica of your instance, or when your project involves several applications, possibly written in different languages, each requiring its own environment configuration.

Infrastructure as code tries to solve this by letting us create environment resources with code. This improves reliability, because the deployment process is documented (in code). It improves speed, because deploying is just a matter of running scripts. And it adds flexibility, because we can change the deployment by updating variables.

OK, enough boring concepts. We are going to use HashiCorp's Terraform to create an EC2 instance as the first piece of our infrastructure.

AWS manual configuration. Terraform user

Assuming you have an AWS account, log in and create a user for all the operations Terraform will perform for us. Keep in mind that managing more types of resources will require more permissions.

For this tutorial we are going to keep it simple and give our user permissions for EC2, S3 and DynamoDB.

In the AWS web console, go to IAM > Users > Add user, enter a name for your user, set the Access type to Programmatic access and click Next.

On the next screen, if you have not created any groups yet, create a new one: give it a name, select the permissions for EC2, S3 and DynamoDB, assign the group to the new user and click Review.

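Finally, review your settings and click Create user. Download the file with the credentials and store it in a safe location; the secret access key is only shown at this point, so you will not be able to retrieve it again later.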
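If you later prefer to manage this user with code as well, the console steps above could be sketched in Terraform roughly like this. It is a minimal sketch that makes a few assumptions. It lives in a separate bootstrap folder. It is applied with credentials that already have IAM permissions (for example an administrator), since the Terraform user cannot create itself. The group and user names `terraform` are hypothetical. The three policies are the AWS managed full-access policies for EC2, S3 and DynamoDB.

```hcl
# Hypothetical bootstrap config: apply it with credentials that already
# have IAM permissions, not with the user it creates.
resource "aws_iam_group" "terraform" {
  name = "terraform" # hypothetical group name
}

resource "aws_iam_user" "terraform" {
  name = "terraform" # hypothetical user name
}

# Adds the user to the group
resource "aws_iam_group_membership" "terraform" {
  name  = "terraform-group-membership"
  group = "${aws_iam_group.terraform.name}"
  users = ["${aws_iam_user.terraform.name}"]
}

# AWS managed policies matching the EC2, S3 and DynamoDB permissions
resource "aws_iam_group_policy_attachment" "ec2" {
  group      = "${aws_iam_group.terraform.name}"
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
}

resource "aws_iam_group_policy_attachment" "s3" {
  group      = "${aws_iam_group.terraform.name}"
  policy_arn = "arn:aws:iam::aws:policy/AmazonS3FullAccess"
}

resource "aws_iam_group_policy_attachment" "dynamodb" {
  group      = "${aws_iam_group.terraform.name}"
  policy_arn = "arn:aws:iam::aws:policy/AmazonDynamoDBFullAccess"
}
```

The access keys are deliberately left out. Creating them with the aws_iam_access_key resource would store the secret in the Terraform state, so generating them from the console is the simpler option.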

AWS manual configuration. Terraform state

The tool we are using, Terraform, works by reading files that specify our environment. Terraform interprets those instructions, creates the resources (EC2 instances, S3 buckets, etc.) and records a state. You can think of the state as a map of our environment that Terraform uses to detect changes in the configuration and apply them to the environment.

By default Terraform saves the state on our computer, but if we work in a team we should share the state with our teammates. One of the best ways to do this is to store the state file in an S3 bucket that everyone with the right credentials can access.

To do this, go to the S3 service, click Create bucket, set a name for your bucket and create it with the default settings. Bucket names are global across AWS, so pick your own and remember it for the configuration later.
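The bucket has to exist before Terraform can use it as a backend, which is why we create it by hand. If you want this step in code too, a minimal sketch could live in a separate bootstrap folder that keeps its own state locally, next to a provider "aws" block like the one in config.tf. This assumes the 1.x AWS provider used in this tutorial; newer provider versions move versioning to a separate aws_s3_bucket_versioning resource. Enabling versioning is an optional extra, not something the tutorial requires: it lets you recover an earlier copy of the state file.

```hcl
# Hypothetical bootstrap config kept in its own folder with local state
resource "aws_s3_bucket" "terraform_states" {
  # Must match the bucket name used later in the backend configuration
  bucket = "terraform-states-storage"

  # Keeps previous versions of terraform.tfstate so they can be restored
  versioning {
    enabled = true
  }
}
```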

AWS manual configuration. Terraform lock

Now we have a place to store the state of our environment and share it with our teammates. But since everyone will be able to deploy, we don't want two people overwriting the environment configuration at the same time, and that is why we need to lock the state.

According to the Terraform documentation, if we already store our state in S3, the recommended way to lock it is with an AWS DynamoDB table. With this combination, Terraform writes a lock entry to the table before it modifies the state and removes it when it finishes. If another run finds the state already locked, it stops with an error instead of changing anything.

Once more, go to the AWS console and open the DynamoDB service. Click Create table, set a name for the table and enter LockID (type String) as the primary key. Keep the default settings and create the table.
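The same table can be described in Terraform. This is a minimal sketch, assuming a separate bootstrap folder with local state and a provisioned capacity of 5 read and 5 write units (an arbitrary small value). The part that matters for the S3 backend is the string hash key named exactly LockID.

```hcl
# Hypothetical bootstrap config: the lock table used by the S3 backend
resource "aws_dynamodb_table" "terraform_lock" {
  name           = "terraform-states-lock-dynamo"
  hash_key       = "LockID" # the S3 backend expects exactly this key name
  read_capacity  = 5        # assumption: small provisioned capacity
  write_capacity = 5

  attribute {
    name = "LockID"
    type = "S" # S = string
  }
}
```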

Hands in the mud. Terraform project

We are going to use Docker to run the Terraform commands because I don’t like installing things on my computer, and it also gives me the flexibility to update versions whenever I want. I’m not covering the Docker setup because that is another topic, and there is plenty of information on the Internet about how to set up Docker on your machine.

Once we have our external resources, we need to prepare our workspace.

First, create a folder to store all the Terraform files, and inside it create the following files:

variables.tf

This file contains all the variables used in our deployment:

# AWS Config

variable "aws_access_key" {}

variable "aws_secret_key" {}

variable "aws_region" { default = "us-west-2" }

Sometimes we can set a default value, like the region variable. For sensitive information such as the AWS keys, we leave the default empty and supply the values when we run Terraform, so they never end up in our project files.

config.tf

This file contains the configuration parameters for the environment:

provider "aws" {
  access_key = "${var.aws_access_key}"
  secret_key = "${var.aws_secret_key}"
  region     = "${var.aws_region}"
  version    = "~> 1.7"
}

terraform {
  backend "s3" {
    bucket         = "terraform-states-storage"
    key            = "terraform-tutorial/terraform.tfstate"
    region         = "us-west-2"
    dynamodb_table = "terraform-states-lock-dynamo"
  }
}

As you can see, in the provider block we specify that we are working with AWS, and we take the keys and the region from the variables declared in variables.tf. You don’t need to import that file: Terraform loads every .tf file in the folder and handles it for us.

In the terraform block we specify the resources we created manually in AWS. You can read the instructions like this: store the state in S3, in this bucket, at this specific location (key), in a bucket located in this region, and use this table to lock the state. With these instructions in place, we can initialize our infrastructure.
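Because the state can hold sensitive values, you may also want S3 to encrypt it. Below is a sketch of the same backend block with the S3 backend's encrypt option added; after changing the backend configuration, Terraform asks you to run init again. Note that the backend block only accepts literal values. That is why the bucket and table names are written out literally, and why the backend gets its credentials from environment variables instead of from our Terraform variables.

```hcl
terraform {
  backend "s3" {
    # Only literal values are allowed here; "${var.aws_region}" would fail
    bucket         = "terraform-states-storage"
    key            = "terraform-tutorial/terraform.tfstate"
    region         = "us-west-2"
    dynamodb_table = "terraform-states-lock-dynamo"

    # Server-side encryption of the state file stored in the bucket
    encrypt = true
  }
}
```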

Hands in the mud. Terraform init

Once we have the basic configuration, we need to initialize the project by running the following command:

docker run -it --rm \
  -v "$(pwd)":/data \
  -w /data \
  -e AWS_ACCESS_KEY_ID=<YOUR_ACCESS_KEY> \
  -e AWS_SECRET_ACCESS_KEY=<YOUR_SECRET_KEY> \
  hashicorp/terraform:light init

As you can see, we pass the access keys as environment variables. This keeps sensitive information out of our project files, and it is also how the S3 backend gets its credentials, since the backend block cannot read Terraform variables. After running the command you will get output like this:

Initializing the backend...

Successfully configured the backend "s3"! Terraform will automatically
use this backend unless the backend configuration changes.

Initializing provider plugins...
- Checking for available provider plugins on https://releases.hashicorp.com...
- Downloading plugin for provider "aws" (1.36.0)...

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.

Congrats! This means we are ready to set up our infrastructure with code!

A little break. The AWS Command Line Interface

Amazon EC2 instances can run a wide variety of images, so the first thing we need to do is choose one. For this tutorial we are going to use Ubuntu 18.04. Terraform needs a way to identify this image (its AMI ID), and we can find the information to build that lookup with the AWS CLI.

How or where do we get this ID? We need to take a short break to understand how the AWS CLI works and how we can get that information.

First of all, you need the AWS CLI installed. I will not dig into that; refer to the documentation and install the version for your OS.

Let’s start with this command

aws ec2 describe-images --filters "Name=name,Values=ubuntu/images/hvm-ssd/ubuntu-bionic-18.04*" --query 'Images[*].{OID:OwnerId, N:Name}'

The AWS CLI uses the first argument as the main command (the service) and the second as the sub-command. In this instruction we refer to the EC2 service and request a description of its images. The --filters flag restricts the results to images whose name starts with ubuntu/images/hvm-ssd/ubuntu-bionic-18.04; the trailing * means we don't care how the name ends.

The command returns a lot of information that, honestly, we don't need. That is why we add the second flag, --query, to request only the owner ID and the name of each image.

You will get a response like this:

ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180613    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180823    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180814-3b73ef49-208f-47e1-8a6e-4ae768d8a333-ami-0b425589c7bb7663d.4    679593333241
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180912    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180426.2    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180806    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180617    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180814    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180522    099720109477
ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20180724    099720109477

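The first column is the name of the image and the second is the owner ID. Almost every image has the same owner ID, 099720109477, because the owner of these images is Canonical. One image was published by a different account (679593333241), which is why it is worth filtering by owner as well as by name.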

If you need more information or have questions about the command syntax, you can always refer to the documentation for the describe-images sub-command. For now, we already have what we need.

The battlefield. The Datasource

The idea of Terraform is to use code for everything we would otherwise do manually. So, as you may guess, we need to translate the query we just ran into something Terraform understands. This is called a data source: a set of instructions that builds a query to get information from AWS. Later, other parts of the configuration can reference the data source to use the information it returns.

aws_ami.tf

data "aws_ami" "ubuntu" {
  most_recent = true

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }

  owners = ["099720109477"] # Canonical
}

We are creating an AWS AMI data source called ubuntu. As you can see, we translate the AWS CLI filter into something Terraform understands. We filter by name and virtualization type and specify the owner. Since several images match, we ask only for the most recent one.
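To check which image the data source actually picked, you could add a couple of outputs. This is a minimal sketch, assuming a new file with the hypothetical name outputs.tf (any .tf file in the folder works, since Terraform loads all of them). The values are printed at the end of terraform apply.

```hcl
# outputs.tf (hypothetical file name)
# Prints the ID and name of the AMI selected by the "ubuntu" data source
output "ubuntu_ami_id" {
  value = "${data.aws_ami.ubuntu.id}"
}

output "ubuntu_ami_name" {
  value = "${data.aws_ami.ubuntu.name}"
}
```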

The battlefield. Raise your sword

Finally, time to create your instance!

main.tf

resource "aws_instance" "my-test-instance" {
  ami           = "${data.aws_ami.ubuntu.id}"
  instance_type = "t2.micro"

  tags = {
    Name    = "test-instance"
    Project = "terraform-tutorial"
  }
}

This will be our main file, where we create an aws_instance resource called my-test-instance.

The block is easy to read. We use the id from our aws_ami data source as the AMI for the instance. The instance type is t2.micro because this is a tutorial and that type is eligible for the AWS Free Tier. Finally, a nested tags object lets us add tags to track our resources.
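The flexibility mentioned at the beginning comes from variables: instead of hardcoding t2.micro, the type can be read from a variable and changed at deploy time. The sketch below adds a new variable with the hypothetical name instance_type to variables.tf and updates the resource in main.tf to use it.

```hcl
# variables.tf: hypothetical variable, defaulting to the tutorial's type
variable "instance_type" { default = "t2.micro" }

# main.tf: the resource now reads the type from the variable
resource "aws_instance" "my-test-instance" {
  ami           = "${data.aws_ami.ubuntu.id}"
  instance_type = "${var.instance_type}"

  tags = {
    Name    = "test-instance"
    Project = "terraform-tutorial"
  }
}
```

With this change, adding -var 'instance_type=t2.small' to the plan or apply command deploys a different size without touching the code.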

The battlefield. Yes, we are ready

Now that everything is set up, we are going to explore three new Terraform commands: plan, apply and destroy.

Terraform plan

This command shows us our “plan”, a review of the changes Terraform is going to make to our infrastructure:

docker run -it --rm \
  -v "$(pwd)":/data \
  -w /data \
  -e AWS_ACCESS_KEY_ID=<YOUR_ACCESS_KEY> \
  -e AWS_SECRET_ACCESS_KEY=<YOUR_SECRET_KEY> \
  hashicorp/terraform:light plan \
  -var 'aws_access_key=<YOUR_ACCESS_KEY>' \
  -var 'aws_secret_key=<YOUR_SECRET_KEY>'

You may notice that we pass the AWS keys twice, in two different forms. The -e flags set environment variables inside the container, which the S3 backend uses to read, write and lock the state. The -var flags set the Terraform variables declared in variables.tf, which the provider uses to create the resources. Variables can be set in several ways (for example with a .tfvars file or with TF_VAR_ environment variables), but I like to set them inline in the command.

After running this command you get output like this:

Refreshing Terraform state in-memory prior to plan...
The refreshed state will be used to calculate this plan, but will not be
persisted to local or remote state storage.

data.aws_ami.ubuntu: Refreshing state...

------------------------------------------------------------------------

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  + aws_instance.my-test-instance
      id:
      ami:                          "ami-0bbe6b35405ecebdb"
      arn:
      associate_public_ip_address:
      availability_zone:
      cpu_core_count:
      cpu_threads_per_core:
      ebs_block_device.#:
      ephemeral_block_device.#:
      get_password_data:            "false"
      instance_state:
      instance_type:                "t2.micro"
      ipv6_address_count:
      ipv6_addresses.#:
      key_name:
      network_interface.#:
      network_interface_id:
      password_data:
      placement_group:
      primary_network_interface_id:
      private_dns:
      private_ip:
      public_dns:
      public_ip:
      root_block_device.#:
      security_groups.#:
      source_dest_check:            "true"
      subnet_id:
      tags.%:                       "2"
      tags.Name:                    "test-instance"
      tags.Project:                 "terraform-tutorial"
      tenancy:
      volume_tags.%:
      vpc_security_group_ids.#:

Plan: 1 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform
can't guarantee that exactly these actions will be performed if
"terraform apply" is subsequently run.

Releasing state lock. This may take a few moments...

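Basically, it is a report of the changes we are going to make. If your infrastructure is big enough, consider saving the plan to a file with the -out option, as the note at the end of the output suggests, so that apply performs exactly those actions.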

Terraform apply

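The next command executes our plan. Unless you give it a saved plan file, Terraform shows the plan again and asks for confirmation (type yes) before changing anything: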

docker run -it --rm \
  -v "$(pwd)":/data \
  -w /data \
  -e AWS_ACCESS_KEY_ID=<YOUR_ACCESS_KEY> \
  -e AWS_SECRET_ACCESS_KEY=<YOUR_SECRET_KEY> \
  hashicorp/terraform:light apply \
  -var 'aws_access_key=<YOUR_ACCESS_KEY>' \
  -var 'aws_secret_key=<YOUR_SECRET_KEY>'

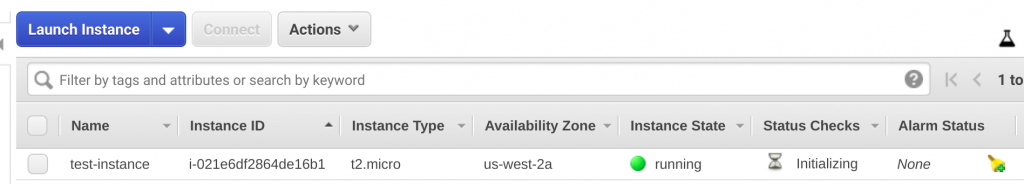

Depending on how big our plan is, this can take a while, but after a few moments we can go to the AWS console and watch our instance being created.

Terraform destroy

At some point we will also need to destroy our infrastructure, so let's learn how to do it:

docker run -it --rm \
  -v "$(pwd)":/data \
  -w /data \
  -e AWS_ACCESS_KEY_ID=<YOUR_ACCESS_KEY> \
  -e AWS_SECRET_ACCESS_KEY=<YOUR_SECRET_KEY> \
  hashicorp/terraform:light destroy \
  -var 'aws_access_key=<YOUR_ACCESS_KEY>' \
  -var 'aws_secret_key=<YOUR_SECRET_KEY>'

Keep in mind that this command deletes every resource managed by this configuration. Terraform asks for confirmation and only accepts the word yes as a valid answer, but use this command wisely.

This is the output of the destroy command:

Acquiring state lock. This may take a few moments...
data.aws_ami.ubuntu: Refreshing state...
aws_instance.my-test-instance: Refreshing state... (ID: i-021e6df2864de16b1)

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  - destroy

Terraform will perform the following actions:

  - aws_instance.my-test-instance

Plan: 0 to add, 0 to change, 1 to destroy.

Do you really want to destroy all resources?
  Terraform will destroy all your managed infrastructure, as shown above.
  There is no undo. Only 'yes' will be accepted to confirm.

  Enter a value: yes

aws_instance.my-test-instance: Destroying... (ID: i-021e6df2864de16b1)
aws_instance.my-test-instance: Still destroying... (ID: i-021e6df2864de16b1, 10s elapsed)
aws_instance.my-test-instance: Still destroying... (ID: i-021e6df2864de16b1, 20s elapsed)
aws_instance.my-test-instance: Still destroying... (ID: i-021e6df2864de16b1, 30s elapsed)
aws_instance.my-test-instance: Still destroying... (ID: i-021e6df2864de16b1, 40s elapsed)
aws_instance.my-test-instance: Destruction complete after 49s

Destroy complete! Resources: 1 destroyed.
Releasing state lock. This may take a few moments...

If you check the AWS console, the instance should now be in the terminated state (terminated instances stay listed for a short while before they disappear).

Now you are able to create and destroy EC2 instances WITH CODE!!! This is the first step toward a project built with infrastructure as code. At this point our instance is useless, because nothing is installed on it and we cannot connect to it.

In the next tutorial I will show you how to configure security groups, print the Elastic IP generated for our instance and install a few things on it.

Part 2. Adding security groups and printing information of the resources

GitHub repository